About the tool. The AHP-based ontology evaluation system is decision-support software that automatically evaluates ontologies based on their complex characteristics. The user specifies, in a simple manner, the importance of each criterion and the domain that the desired ontology should cover. Ontologies are described by a hierarchical model of independent characteristics, which allows the problem to be analysed from different perspectives and at different levels of abstraction. The decision is based on the concrete measurements at the leaf nodes and on their relative importance at more abstract levels.
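As an illustration of how leaf measurements and criterion weights can combine into a single value, the following is a minimal sketch of a weighted sum over leaf criteria. It is not taken from the tool: the class name, the criterion names and all numbers are hypothetical, and the weights are assumed to be global (already multiplied down the hierarchy) and to sum to 1.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical sketch: combines leaf-level scores with criterion weights. */
final class AhpAggregationSketch {

    /**
     * Returns the weighted sum of leaf scores for one ontology.
     * Both maps are keyed by criterion name; weights are assumed to sum to 1.
     */
    static double aggregate(Map<String, Double> weights, Map<String, Double> leafScores) {
        double total = 0.0;
        for (Map.Entry<String, Double> entry : weights.entrySet()) {
            Double score = leafScores.get(entry.getKey());
            if (score == null) {
                throw new IllegalArgumentException("No score for criterion: " + entry.getKey());
            }
            total += entry.getValue() * score;
        }
        return total;
    }

    public static void main(String[] args) {
        // Illustrative weights and normalised measurements (made-up values).
        Map<String, Double> weights = new LinkedHashMap<>();
        weights.put("domainCoverage", 0.6);
        weights.put("structure", 0.4);

        Map<String, Double> ontologyA = new LinkedHashMap<>();
        ontologyA.put("domainCoverage", 0.5);
        ontologyA.put("structure", 0.25);

        System.out.println("Score of ontology A: " + aggregate(weights, ontologyA));
    }
}
```

An ontology with a higher aggregated value would be ranked ahead of one with a lower value under the same weights.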

User's manual (pdf)

Paper: A. Groza, I. Dragoste, I. Sincai and I. Jimborean - An ontology selection and ranking system based on analytical hierarchy process, 16th International Symposium on Symbolic and Numeric Algorithms for Scientific Computing (SYNASC2014), Timisoara, Romania, 22-25 September 2014 (preprint pdf) (presentation)

This section describes the prerequisites for running the application. The application was developed with Java SE Development Kit 7 on a Windows x64 operating system, using the Eclipse IDE.

It is a desktop application. It relies on an internet connection to update ontology measurements based on their URI, but it can also run offline using a local ontology repository, which may not be up to date.


To run the application on a Windows operating system, a Java Runtime Environment (JRE) version 1.7 or higher must be installed.


The WordNet 2.1 dictionary application must be installed before running the application, since it provides access to the dictionary knowledge base. The path of the WordNet installation home directory (e.g. "d:/jde/WordNet") must be set as a system variable named WNHOME. The name of this system variable is defined in the application as a static field of the ApplicationConstants class, where it can be edited.
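Before starting the application, it can be useful to confirm that WNHOME is visible to Java and points to an existing directory. The check below is a minimal sketch; the class and method names are hypothetical and it is not part of the application.

```java
import java.io.File;

/** Hypothetical helper: checks that the WNHOME system variable is usable. */
final class WordNetHomeCheck {

    /** Name of the system variable, as given in the text above. */
    static final String WN_HOME_VARIABLE = "WNHOME";

    /** Returns the directory if the value is non-empty and names an existing directory, else null. */
    static File resolve(String value) {
        if (value == null || value.trim().isEmpty()) {
            return null;
        }
        File dir = new File(value.trim());
        return dir.isDirectory() ? dir : null;
    }

    public static void main(String[] args) {
        File home = resolve(System.getenv(WN_HOME_VARIABLE));
        if (home == null) {
            System.out.println(WN_HOME_VARIABLE + " is not set or is not a directory");
        } else {
            System.out.println("WordNet home: " + home.getAbsolutePath());
        }
    }
}
```

Note that a newly created system variable is usually only visible to processes started after it was set, so a console or IDE that was already open may need to be restarted.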


The application requires communication with a running instance of MySQL Server, version 5.6 or higher. A local server connection (jdbc:mysql://localhost:3306/) must exist with the following credentials:

username - root; password - root

The database schema ontologies must be imported into this server instance from the dump file located in the project at project_environment/prerequisites/ontologies_database_schema.sql. The application's database connection can be reconfigured by editing the file META-INF/persistence.xml.
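For reference, the default connection settings above can be expressed with the standard JPA property names, as in the sketch below. This is an assumption about how the settings might look, not a copy of the shipped persistence.xml: the class name is hypothetical, the provider may use different property keys, and appending the schema name ontologies to the JDBC URL is a guess.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical sketch of the default connection settings as standard JPA properties. */
final class ConnectionSettingsSketch {

    /** Builds a property map with the defaults described in the text. */
    static Map<String, String> defaultJdbcProperties() {
        Map<String, String> properties = new HashMap<>();
        // Assumption: the schema name is appended to the local server URL.
        properties.put("javax.persistence.jdbc.url", "jdbc:mysql://localhost:3306/ontologies");
        properties.put("javax.persistence.jdbc.user", "root");
        properties.put("javax.persistence.jdbc.password", "root");
        return properties;
    }

    public static void main(String[] args) {
        for (Map.Entry<String, String> entry : defaultJdbcProperties().entrySet()) {
            System.out.println(entry.getKey() + " = " + entry.getValue());
        }
    }
}
```

In a JPA application, a map like this can be passed to `Persistence.createEntityManagerFactory(unitName, properties)` to override values from persistence.xml; the persistence unit name used by this application is not stated here, so editing the file directly remains the documented route.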


File Resources

The project_environment folder contains the file resources needed to run the application.

- The prerequisites folder contains the database dump needed to create and load the database before running the application.

- The ontology evaluation files folder contains the initial .pwc file, which loads the AHP decision problem. It also contains problem files with pre-filled pairwise comparisons of different degrees of inconsistency (consistent1.pwc and consistent2.pwc, medium inconsistency, high inconsistency, and the demo file used as the example in the screenshots of this chapter), which can be imported through the GUI of the AHP evaluation module.

- The local_ontology_repo folder contains the downloaded ontology files. The system uses them as a local backup when the corresponding online resources are not available or the internet connection is disabled.

- The AHP Ontology Evaluation System folder contains the runnable Java application.

- Download v.1.0

View ontology measurements.

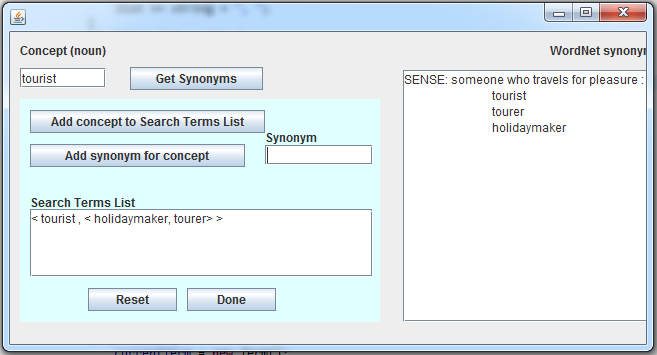

Figure 1. Computing the domain coverage.

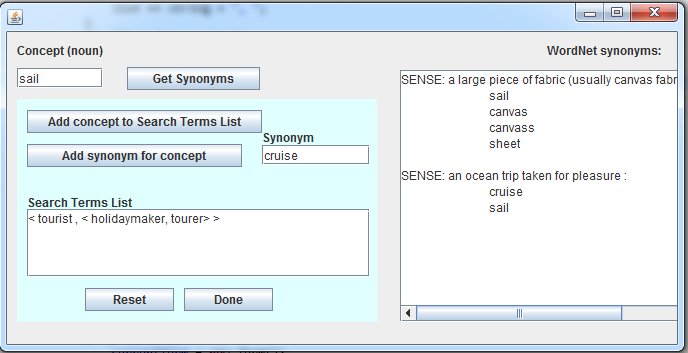

Define domain using synonyms.

Add new concepts by repeating the steps above. As shown in Figure 2, some concepts may have several word senses; the synonyms corresponding to the desired sense must be selected. The user can add concepts to the Search Terms List without adding synonyms for them, but this decreases the chances of finding classes that correspond to that concept.
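The effect of synonyms on matching can be illustrated with a small sketch. The exact coverage measure used by the tool is not described here, so the code assumes a simple one: the fraction of search terms for which the term itself or one of its selected synonyms equals an ontology class label, ignoring case. All names and data are hypothetical.

```java
import java.util.Collection;
import java.util.HashSet;
import java.util.Locale;
import java.util.Map;
import java.util.Set;

/** Hypothetical sketch of a synonym-aware coverage measure (not the tool's actual metric). */
final class DomainCoverageSketch {

    /**
     * Returns the fraction of search terms matched by at least one class label,
     * where a term matches through its own name or any of its synonyms.
     */
    static double coverage(Map<String, Set<String>> termsWithSynonyms, Collection<String> classLabels) {
        if (termsWithSynonyms.isEmpty()) {
            return 0.0;
        }
        Set<String> labels = new HashSet<>();
        for (String label : classLabels) {
            labels.add(label.toLowerCase(Locale.ROOT));
        }
        int matched = 0;
        for (Map.Entry<String, Set<String>> entry : termsWithSynonyms.entrySet()) {
            boolean hit = labels.contains(entry.getKey().toLowerCase(Locale.ROOT));
            for (String synonym : entry.getValue()) {
                hit = hit || labels.contains(synonym.toLowerCase(Locale.ROOT));
            }
            if (hit) {
                matched++;
            }
        }
        return (double) matched / termsWithSynonyms.size();
    }
}
```

Under this assumption, a term such as "car" with the synonym "automobile" matches an ontology whose class is labelled "Automobile", whereas the same term without synonyms would not be matched.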

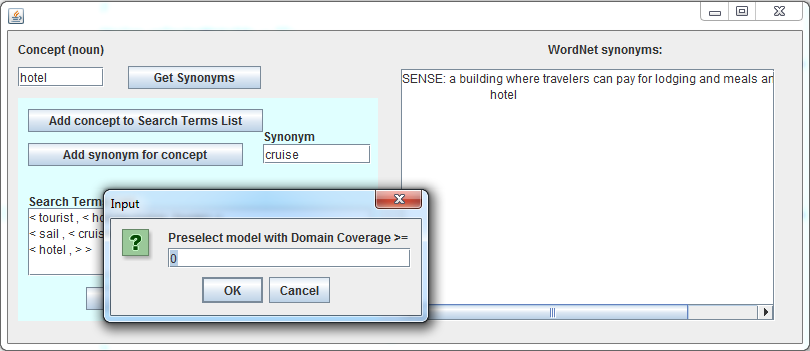

Calculate domain coverage.

Once the domain coverage processing has completed, the dialog box in Figure 3 appears. The user can consult the generated DomainCoverageReport.pdf in the reports folder to see the values for each ontology.

Pre-select ontologies for AHP evaluation.

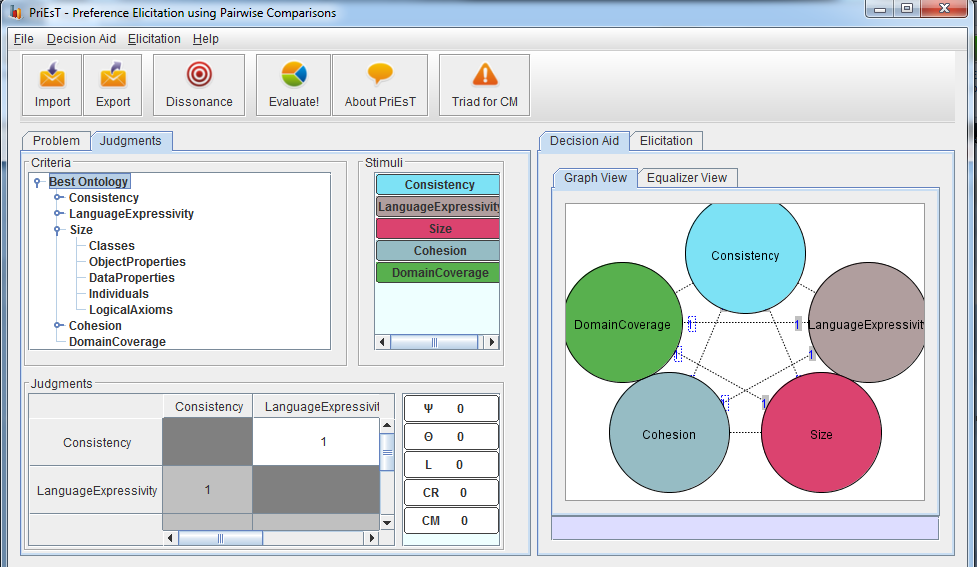

View criteria hierarchy.

Express preference judgements.

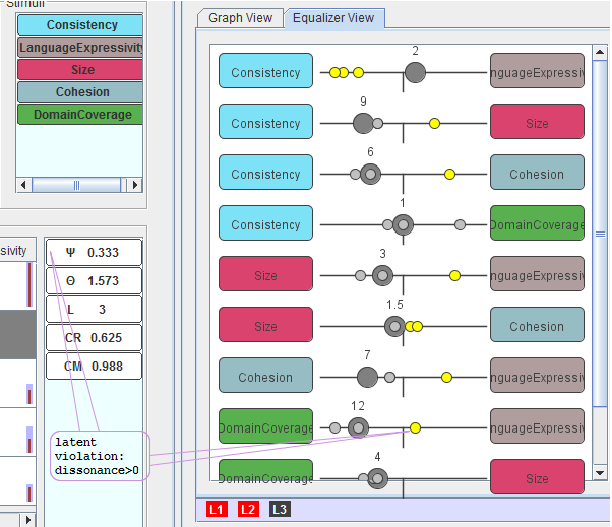

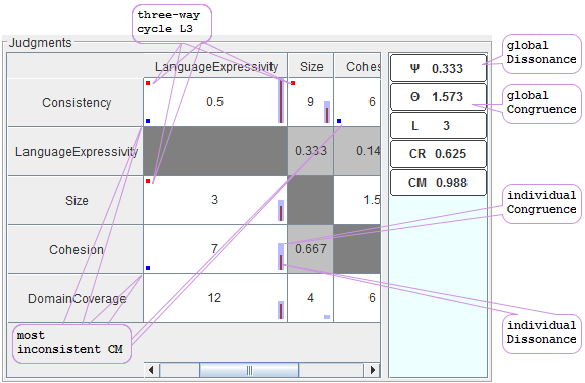

Assess preference consistency.
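As background for this step, the sketch below computes Saaty's consistency ratio (CR) for a reciprocal pairwise comparison matrix. How the tool itself computes consistency is not described here; the code assumes the classical approach, estimating the principal eigenvalue by power iteration and using Saaty's published random index values for matrices of size up to 10. The class and method names are hypothetical.

```java
import java.util.Arrays;

/** Hypothetical sketch of Saaty's consistency ratio for a pairwise comparison matrix. */
final class ConsistencyRatioSketch {

    /** Saaty's random index, indexed by matrix size n (entries for n = 0..10). */
    private static final double[] RANDOM_INDEX =
            {0.0, 0.0, 0.0, 0.58, 0.90, 1.12, 1.24, 1.32, 1.41, 1.45, 1.49};

    /** Estimates the principal eigenvalue of a positive square matrix by power iteration. */
    static double principalEigenvalue(double[][] a) {
        int n = a.length;
        double[] v = new double[n];
        Arrays.fill(v, 1.0 / n);
        double lambda = n;
        for (int iter = 0; iter < 100; iter++) {
            double[] next = new double[n];
            double sum = 0.0;
            for (int i = 0; i < n; i++) {
                for (int j = 0; j < n; j++) {
                    next[i] += a[i][j] * v[j];
                }
                sum += next[i];
            }
            for (int i = 0; i < n; i++) {
                next[i] /= sum;
            }
            // v sums to 1, so the sum of A*v approaches the eigenvalue as v converges.
            lambda = sum;
            v = next;
        }
        return lambda;
    }

    /** Returns CR = CI / RI, with CI = (lambdaMax - n) / (n - 1); 0 for n < 3. */
    static double consistencyRatio(double[][] a) {
        int n = a.length;
        if (n < 3) {
            return 0.0;
        }
        if (n >= RANDOM_INDEX.length) {
            throw new IllegalArgumentException("Random index not tabulated for n = " + n);
        }
        double ci = (principalEigenvalue(a) - n) / (n - 1);
        return ci / RANDOM_INDEX[n];
    }
}
```

A perfectly consistent matrix gives a CR of approximately 0. By Saaty's convention, judgements with a CR of 0.1 or less are usually considered acceptably consistent; whether the tool applies the same threshold is not stated here.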

View evaluation results.

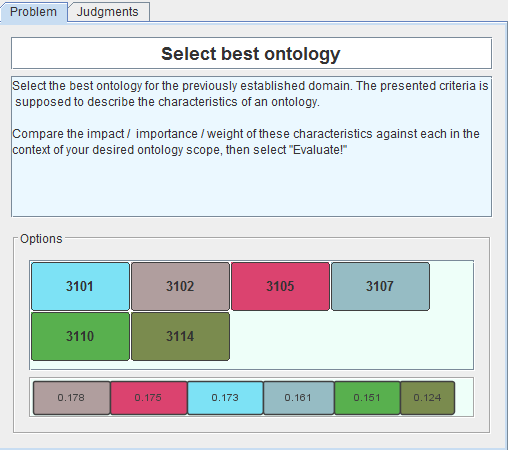

The final values for the ontology alternatives (identified by id) are shown in the Problem tab (Figure 7). The correspondence between alternatives and evaluation values is colour-coded. This tab also contains the problem description and usage guidelines.

View evaluation accuracy.

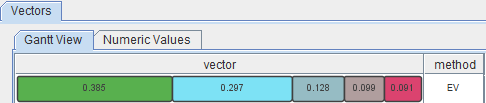

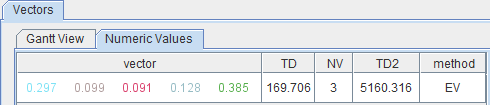

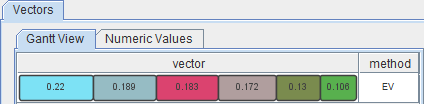

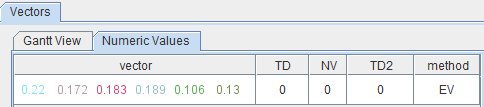

By selecting a leaf element in the Criteria panel, the user can see the elicited weights of the alternatives for the corresponding criterion in the Vectors tab. The example in Figures 10 and 11 displays the alternative weights for the atomic criterion Average Number of Sub-classes. As in the previous step, the relation between weight values and Stimuli is colour-coded.

Import/export .pwc files.

To exit the application, click the exit button in the upper right corner of the window. The decision problem is not saved unless it has been exported. Before running the program again, the user is advised to save the generated reports in a different location or under a different name, as they will otherwise be overwritten.

Adrian.Groza [at] cs.utcluj.ro