Authors: Blaga Bianca-Cerasela-Zelia, Sergiu Nedevschi

The paper has been submitted for publication to IEEE Transactions on Geoscience and Remote Sensing. Details can be found in this workshop presentation.

We created a large aerial dataset that combines manually annotated real-world recordings with synthetic data collected in simulators. It targets tasks required for forest monitoring, such as semantic segmentation, depth estimation and scene understanding.

A. Real Dataset

Starting from the real-world recordings of WildUAV [1], we manually annotated 4 mapping sets, obtaining dense and accurate semantic labels. We also used an automatic label propagation method for videos [2]. In total, we obtained over 2,600 real images with semantic segmentation labels covering 9 classes.

The WildUAV dataset can be downloaded from this GitHub repository.

Examples of original images paired with their semantic segmentation labels

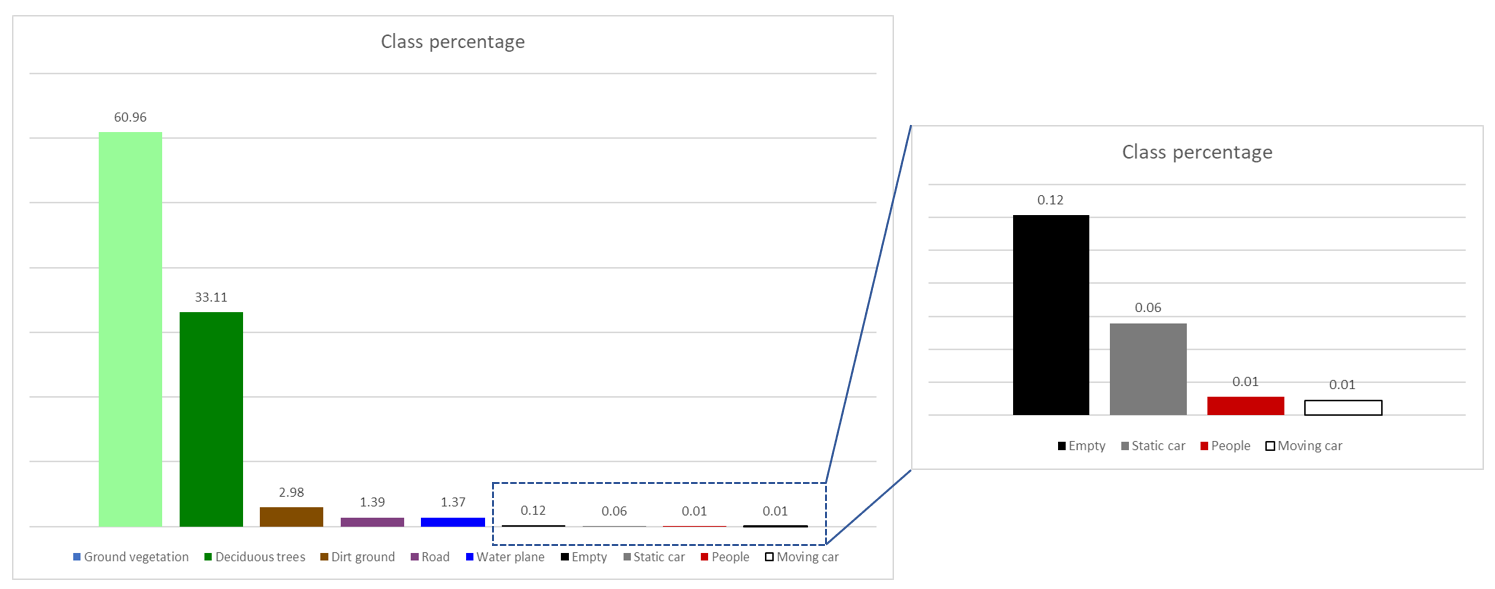

Distribution of semantic classes in the real set

Dataset contents and number of annotations for the real recordings

| Dataset | #images |
|---|---|
| seq00 | 244 |
| seq01 | 497 |
| seq02 | 356 |
| seq03 | 442 |
| vid02 | 1,069 |
| Total | 2,608 |

B. Synthetic Dataset

We created a virtual environment in Unreal Engine 4 using the European Forest package [3], available on the Unreal Engine Marketplace, which provides high-quality scanned foliage textures. We also placed several vehicles throughout the map, using the models provided in the Vehicle Variety Pack [4].

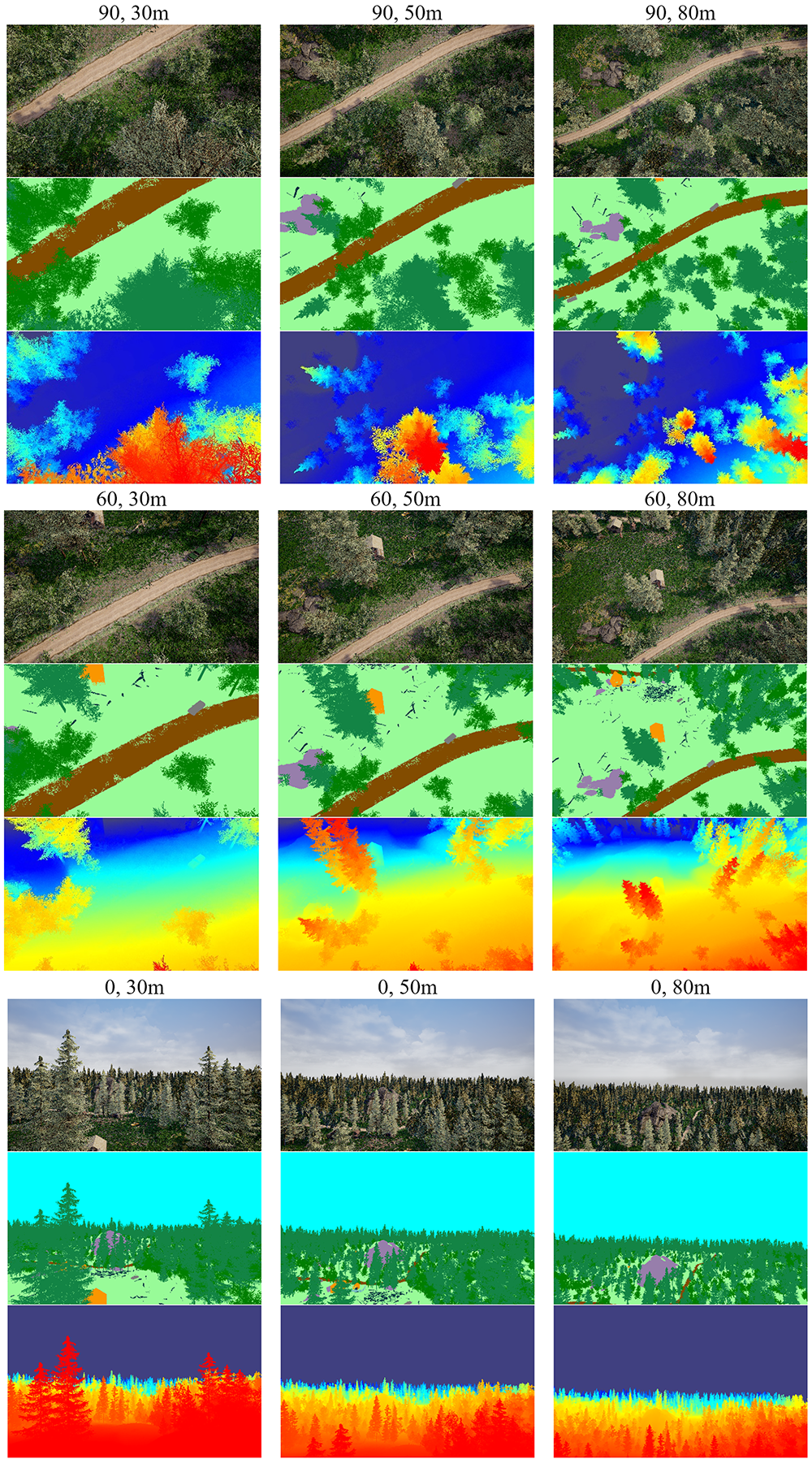

We recorded 22 sequences containing over 31,000 color images, together with the corresponding semantic segmentation labels and depth maps. A total of 11 classes are present; notably, we distinguish between coniferous, deciduous and fallen trees. Drone position and orientation data, provided by the AirSim [5] controller, are also included. The Forest Inspection dataset was recorded in 2 weather conditions (sunny and overcast), at altitudes of 30, 50 and 80 meters, and with 3 pitch angles (0°, -60° and -90°).
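The synthetic table further down numbers the 18 FOV 90 sequences along this grid: sunny first, then overcast, with altitude varying slowest and pitch fastest within each weather block; seq19 to seq22 are additional sunny, straight-down (-90°) recordings at 30 m with a 60° FOV. The Python sketch below maps a sequence number back to its recording settings. It is inferred from that table rather than taken from any official dataset tooling, and the names `sequence_config` and `SequenceConfig` are hypothetical.

```python
from typing import NamedTuple

# Recording grid inferred from the synthetic sequence table:
# 2 weather conditions x 3 altitudes x 3 pitch angles (FOV 90), seq1-seq18.
WEATHERS = ("sunny", "overcast")
ALTITUDES_M = (30, 50, 80)
PITCHES_DEG = (0, -60, -90)


class SequenceConfig(NamedTuple):
    """Recording settings of one synthetic sequence (hypothetical helper type)."""
    weather: str
    fov_deg: int
    pitch_deg: int
    altitude_m: int


def sequence_config(seq: int) -> SequenceConfig:
    """Return the settings of synthetic sequence `seq` (1-22).

    Assumes the table's ordering: within each weather block, altitude
    varies slowest and pitch fastest.
    """
    if 1 <= seq <= 18:
        weather_idx, rest = divmod(seq - 1, 9)   # 9 sequences per weather condition
        alt_idx, pitch_idx = divmod(rest, 3)     # 3 pitch angles per altitude
        return SequenceConfig(
            WEATHERS[weather_idx], 90, PITCHES_DEG[pitch_idx], ALTITUDES_M[alt_idx]
        )
    if 19 <= seq <= 22:
        # seq19-seq22: sunny, FOV 60, straight-down view (-90 deg) at 30 m
        return SequenceConfig("sunny", 60, -90, 30)
    raise ValueError(f"unknown sequence number: {seq}")


print(sequence_config(5))   # sunny, FOV 90, pitch -60, 50 m
print(sequence_config(16))  # overcast, FOV 90, pitch 0, 80 m
```

Encoding the grid as arithmetic rather than a lookup table keeps the numbering rule explicit; if the released files use a different naming scheme, only this function needs to change.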

The contents will be available for download from this Dropbox link after the paper has been peer reviewed and accepted for publication.

Examples of images recorded by the drone in the virtual environment under varying pitch angles and altitudes: color images, semantic labels and depth maps

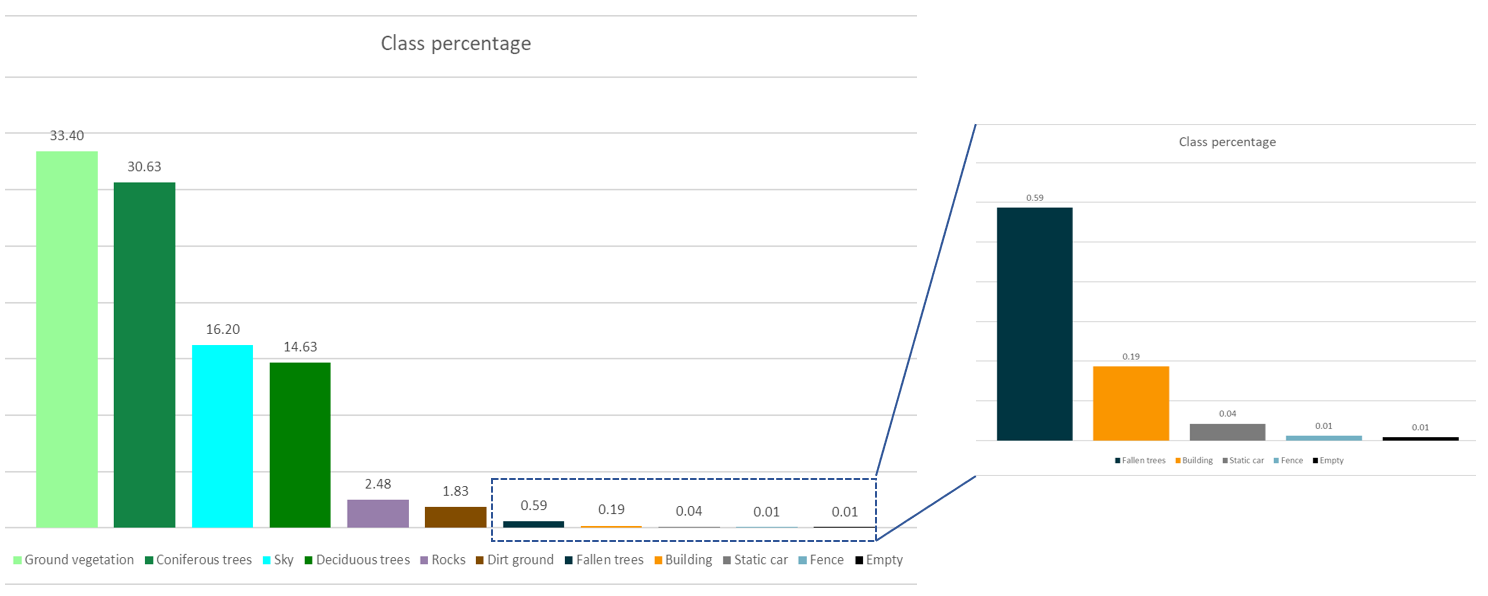

Distribution of semantic classes in the synthetic set

Dataset contents and number of annotations for the synthetic recordings

| Sequence | Weather | FOV (deg) | Pitch (deg) | Altitude (m) | #images |
|---|---|---|---|---|---|
| seq1 | Sunny | 90 | 0 | 30 | 1,468 |
| seq2 | Sunny | 90 | -60 | 30 | 1,501 |
| seq3 | Sunny | 90 | -90 | 30 | 1,469 |
| seq4 | Sunny | 90 | 0 | 50 | 1,432 |
| seq5 | Sunny | 90 | -60 | 50 | 1,447 |
| seq6 | Sunny | 90 | -90 | 50 | 1,437 |
| seq7 | Sunny | 90 | 0 | 80 | 1,436 |
| seq8 | Sunny | 90 | -60 | 80 | 1,464 |
| seq9 | Sunny | 90 | -90 | 80 | 1,397 |
| seq10 | Overcast | 90 | 0 | 30 | 1,522 |
| seq11 | Overcast | 90 | -60 | 30 | 1,618 |
| seq12 | Overcast | 90 | -90 | 30 | 1,556 |
| seq13 | Overcast | 90 | 0 | 50 | 1,398 |
| seq14 | Overcast | 90 | -60 | 50 | 1,398 |
| seq15 | Overcast | 90 | -90 | 50 | 1,341 |
| seq16 | Overcast | 90 | 0 | 80 | 1,489 |
| seq17 | Overcast | 90 | -60 | 80 | 1,471 |
| seq18 | Overcast | 90 | -90 | 80 | 1,398 |
| seq19 | Sunny | 60 | -90 | 30 | 1,325 |
| seq20 | Sunny | 60 | -90 | 30 | 1,324 |
| seq21 | Sunny | 60 | -90 | 30 | 1,322 |
| seq22 | Sunny | 60 | -90 | 30 | 1,309 |
| Total | | | | | 31,531 |
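Altitude and field of view together determine how much ground a single frame covers. As a rough estimate, assuming an ideal pinhole camera looking straight down (pitch -90°) over flat ground, and assuming the FOV values above denote the horizontal field of view, the footprint width is w = 2h·tan(FOV/2). The helper `nadir_footprint_width` below is a hypothetical illustration of that formula, not part of the dataset tools.

```python
import math


def nadir_footprint_width(altitude_m: float, fov_deg: float) -> float:
    """Ground width (m) covered by a straight-down pinhole camera.

    Simplifying assumptions: pitch of -90 deg, flat terrain, and `fov_deg`
    is the horizontal field of view. Terrain relief and tree height are ignored.
    """
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


# Straight-down (FOV, altitude) configurations that appear in the table above
for fov, altitude in [(90, 30), (90, 50), (90, 80), (60, 30)]:
    width = nadir_footprint_width(altitude, fov)
    print(f"FOV {fov} deg at {altitude} m -> about {width:.1f} m wide")
```

Under these assumptions, the FOV 90 straight-down sequences cover roughly 60 m, 100 m and 160 m of ground width at 30, 50 and 80 m, while the FOV 60 sequences at 30 m cover about 34.6 m, which yields noticeably more detail per tree crown.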

Color encoding of the class labels

| Class | [R, G, B] |
|---|---|
| Sky | [0, 255, 255] |
| Deciduous trees | [0, 127, 0] |
| Coniferous trees | [19, 132, 69] |
| Fallen trees | [0, 53, 65] |
| Dirt ground | [130, 76, 0] |
| Ground vegetation | [152, 251, 152] |
| Rocks | [151, 126, 171] |
| Water plane | [0, 0, 255] |
| Building | [250, 150, 0] |
| Fence | [115, 176, 195] |
| Road | [128, 64, 128] |
| Static car | [123, 123, 123] |
| Moving car | [255, 255, 255] |
| People | [200, 0, 0] |
| Empty | [0, 0, 0] |
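Since the labels are stored as color-coded images, training code typically first converts each pixel color into a class index. The Python sketch below does this with a dictionary lookup built from the table above and then computes per-class pixel fractions, similar in spirit to the class-distribution plots. Assumptions: the label image has already been loaded as rows of (R, G, B) tuples (image loading is left out), and class indices follow the row order of the table (0 = Sky, ..., 14 = Empty); this index order is hypothetical and may differ from any official class IDs.

```python
from collections import Counter

# Color encoding of the class labels, copied from the table above.
CLASS_COLORS = {
    "Sky": (0, 255, 255),
    "Deciduous trees": (0, 127, 0),
    "Coniferous trees": (19, 132, 69),
    "Fallen trees": (0, 53, 65),
    "Dirt ground": (130, 76, 0),
    "Ground vegetation": (152, 251, 152),
    "Rocks": (151, 126, 171),
    "Water plane": (0, 0, 255),
    "Building": (250, 150, 0),
    "Fence": (115, 176, 195),
    "Road": (128, 64, 128),
    "Static car": (123, 123, 123),
    "Moving car": (255, 255, 255),
    "People": (200, 0, 0),
    "Empty": (0, 0, 0),
}

# Hypothetical index order: the row order of the table (dicts keep insertion order).
CLASS_NAMES = list(CLASS_COLORS)
COLOR_TO_INDEX = {rgb: idx for idx, rgb in enumerate(CLASS_COLORS.values())}


def rgb_to_class_indices(label_image):
    """Map rows of (R, G, B) pixels to rows of class indices.

    Raises KeyError on colors outside the palette, e.g. if the label image
    was resized with interpolation instead of nearest-neighbour sampling.
    """
    return [[COLOR_TO_INDEX[tuple(pixel)] for pixel in row] for row in label_image]


def class_distribution(index_image):
    """Return the fraction of pixels belonging to each class present."""
    counts = Counter(idx for row in index_image for idx in row)
    total = sum(counts.values())
    return {CLASS_NAMES[idx]: n / total for idx, n in counts.items()}


# Tiny 2x2 example: sky, deciduous trees (twice) and road.
tiny = [[(0, 255, 255), (0, 127, 0)],
        [(0, 127, 0), (128, 64, 128)]]
indices = rgb_to_class_indices(tiny)
print(indices)                      # [[0, 1], [1, 10]]
print(class_distribution(indices))  # {'Sky': 0.25, 'Deciduous trees': 0.5, 'Road': 0.25}
```

Failing loudly on unknown colors is a deliberate choice: silently mapping them to "Empty" would hide preprocessing mistakes that corrupt the labels.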

References

[1] H. Florea, V. C. Miclea and S. Nedevschi, "WildUAV: Monocular UAV dataset for depth estimation tasks," in Proc. 17th IEEE International Conference on Intelligent Computer Communication and Processing (ICCP), 2021.

[2] A. Marcu, D. Costea, V. Licaret and M. Leordeanu, "Towards automatic annotation for semantic segmentation in drone videos," arXiv preprint arXiv:1910.10026, 2019.

[3] Unreal Engine Marketplace, "European Forest." [Online]. Available: https://www.unrealengine.com/marketplace/en-US/product/european-forest

[4] S. S. Blueprints, "Vehicle Variety Pack," 2019. [Online]. Available: https://www.unrealengine.com/marketplace/en-US/product/bbcb90a03f844edbb20c8b89ee16ea32

[5] S. Shah, D. Dey, C. Lovett and A. Kapoor, "AirSim: High-fidelity visual and physical simulation for autonomous vehicles," in Field and Service Robotics, Springer, Cham, 2018, pp. 621-635.