Example of image sequences from the mapping dataset

The WildUAV dataset was developed to enable research on visual perception tasks in the aerial domain, with a focus on deep learning approaches to depth estimation. The data comprises high-resolution RGB images, depth information, and accurate positioning information. The depth and positioning ground truth was generated using photogrammetry software based on traditional Structure-from-Motion and Multi-View Stereo algorithms, which currently outperform their learning-based counterparts at the cost of higher computational complexity.

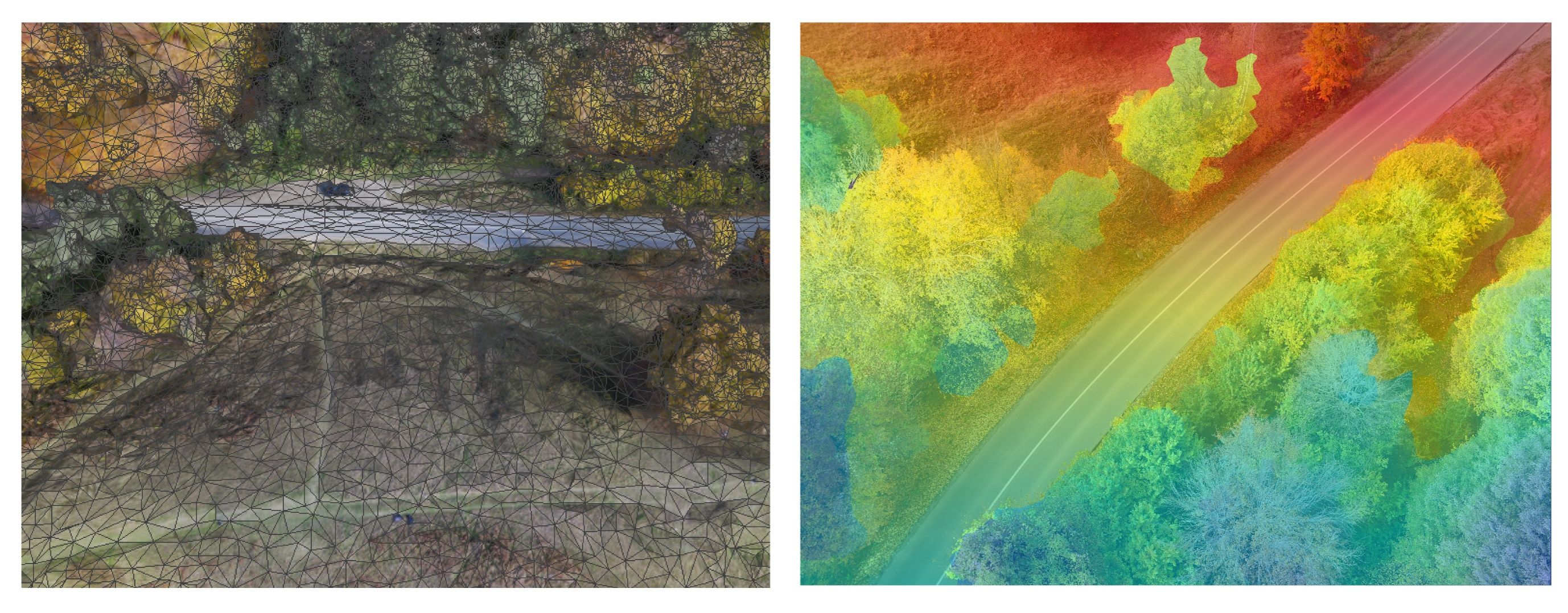

Left: 3D mesh obtained through photogrammetry. Right: color-coded depth projected onto the image.
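A color-coded depth overlay like the one on the right can be approximated with a few lines of NumPy. The sketch below is only an illustration, not the pipeline used to produce the figure: it assumes the depth map is already aligned pixel by pixel with the RGB image, that invalid pixels are stored as zero or NaN, and that a simple red (near) to blue (far) ramp is acceptable. The function names `colorize_depth` and `overlay_depth` are hypothetical and are not part of the dataset's tooling.

```python
import numpy as np


def colorize_depth(depth):
    """Map an (H, W) depth map to an (H, W, 3) uint8 image.

    Assumption: pixels that are zero, negative, or non-finite carry no depth.
    Near pixels are drawn red, far pixels blue.
    """
    depth = np.asarray(depth, dtype=np.float64)
    valid = np.isfinite(depth) & (depth > 0)
    colored = np.zeros(depth.shape + (3,), dtype=np.uint8)
    if not valid.any():
        return colored, valid

    d_min = depth[valid].min()
    d_max = depth[valid].max()
    span = max(d_max - d_min, 1e-12)  # avoid division by zero on flat maps

    # Normalized depth in [0, 1] for valid pixels only.
    t = np.zeros_like(depth)
    t[valid] = (depth[valid] - d_min) / span

    ramp = np.stack([1.0 - t, np.zeros_like(t), t], axis=-1)
    colored[valid] = np.round(ramp[valid] * 255).astype(np.uint8)
    return colored, valid


def overlay_depth(image, depth, alpha=0.5):
    """Blend the colorized depth onto an (H, W, 3) uint8 RGB image.

    Pixels without valid depth keep their original color.
    """
    colored, valid = colorize_depth(depth)
    blended = np.asarray(image, dtype=np.float64).copy()
    blended[valid] = (1.0 - alpha) * blended[valid] + alpha * colored[valid]
    return np.round(blended).astype(np.uint8)
```

Calling `overlay_depth(image, depth, alpha=0.5)` returns an image of the same size in which only pixels with valid depth are tinted, which makes gaps in the depth map easy to spot.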

January 2022 Update: Semantic Segmentation

The scope of the WildUAV dataset has been extended to cover semantic segmentation tasks through the addition of manual semantic annotations.

Examples of original image and semantic segmentation image pairs

The data description, usage license, and download links are available in the GitHub repository.

This dataset was released as part of this published paper, which also includes experiments using supervised and self-supervised Monocular Depth Estimation on the new dataset. More details about the semantic segmentation extension can be found in this workshop presentation and in the upcoming paper.